Ever run into a problem where an application's Master is accepting all the writes and the master's slaves lags? Ever had this same problem yet the Slave has a raid array of very fast SSDs? You must be thinking to yourself, "I call BS, Dathan; a SSD drive can do crazy IOPS". No, it's true and the reason is that mySQL only has two threads for replication.

Thread 1 is called the IO thread and its job is to download the binary logs from the server's master into relay logs on the slave. This is rarely a bottleneck since this is typically sequential disk access. Thread 2 is called the SQL thread. It's job is to take the data which was downloaded from the IO thread and apply it. Since there is 1 thread applying the SQL and that 1 thread has to read each row before writing it (that is just how a write works), that 1 Thread just couldn't move fast enough or use enough resources by itself and lags.

Here are my findings. The master was producing 8-10K IOPS of write traffic, yet with a single mySQL thread, it could only do 2K-2.5K IOPS with a bunch of tweaks and tunable I happen to know about.

Below is a graph

This is a graph of IOPS on a slave. From Sep to Dec, I wasted IOPS on such beautiful hardware with a single replication thread. My beliefe is if you're not utilizing 100% of your hardware resources where 100% is defined as the resources not reserved for growth you are wasting hardware. I needed more threads to keep writes caught up was my conclusion and my thought was I will either need to write my own replication layer which pushes data OR do something that just solves a bunch of problems. Multi-mySQL-Server Instance on a single Server is my solution to utilize more IOPS.

For this application, I need fast IOPS and a lot of disk-space. This particular application counts all the page views, records referrers, records uniques, etc for millions of sites across the web realtime. The owners or blessed administrators of the site are the only one that see detail traffic stats. The application produces roughly 6K-12K inserts a second. Also the data exists for at least two years and many readers of the data could be required in the future.

My approach to solve the application issues are the following.

- Analyze the data. How is it written?

- How many IOPS are produced today?

- Will the IOP request rate grow over time?

- Is the query pattern mainly inserts, updates, selects or all of the above?

Upon analysis, I found that there is a lot of data, mainly new entries (rows) are written throughout the day. I found that traffic in terms of inserts are basically a function of viewed pages for a particular site. It just makes sense-more views more writes, thus more inserts. Since I know I need more IOPS on the slave I need more replication threads. I need the ability to grow the number of replication threads over time. Also since data just grows I need to be able to grow disk space over time as well.

So I said what if I took the hostname converted it to a bigint and assigned it a database. I can in theory have a server per database. This will allow me to grow to at least 1 write server per hostname. Sharding is yet born again but within a single server ;).

Since 1 thread of SQL replication can use up to 2.5K IOPS, let's group all the hostnames into buckets/databases (note buckets and databases from this point is the same thing). Below is an image of grouping hosts into 28 buckets by the number of pageviews. I used a random distribution by taking 500K+ sites and summing their page-views.

So I said what if I took the hostname converted it to a bigint and assigned it a database. I can in theory have a server per database. This will allow me to grow to at least 1 write server per hostname. Sharding is yet born again but within a single server ;).

Since 1 thread of SQL replication can use up to 2.5K IOPS, let's group all the hostnames into buckets/databases (note buckets and databases from this point is the same thing). Below is an image of grouping hosts into 28 buckets by the number of pageviews. I used a random distribution by taking 500K+ sites and summing their page-views.

Here we see that there is a pretty good distribution of pageviews per bucket except for the two major spikes. Thus isolating this grouping of very popular sites to a 4 more buckets gave it the same flat-ish type graph. Now I have 32 buckets to handle all the sites.

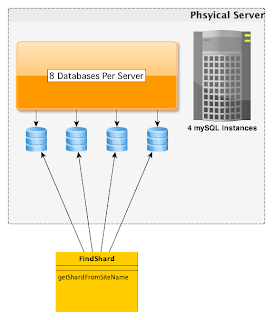

From other benchmarks done and not in this scope my benchmark-data points to a single server doing 40K IOPS+ on 1TB of Raid-5 SSD. Taking the 32 buckets where each bucket is now a database I assign them to 4 mySQL servers. So each server has 8 databases. 4 mysql servers == 4 replication threads. This should give me 10K IOPS no problem thus keeping up with the master. Additionally not confuse people that are use to connecting to mySQL from the command line the multi-mySQL server config starts at port 3307 and ends at port 3310.

The Diagram above shows how the Application picks which server by hitting a specific port on the Physical Server. Based on the server name I know what mySQL server and port to hit. Let's delve more into this.

Slowing down the writing to the master is a no-no. Adding a network lookup for every hostname stat-type to figure out what bucket said hostname belongs to is not efficient. The application only knows the fully qualified domain name thus passing a numerical representation of the hostname is not possible.

To get around this I use consistent hashing-a calculation inside application space which converts a hostname to a numerical bigint representation. This numerical representation from the consistent hash allows me to build a range of ids to assign to a database. This approach allows me to move a block of hosts where a block can be a single host to another arbitrary bucket allowing growth past 32 databases.

if ( $id < 91613391575) return 1;

if ( $id >= 91613391575 && $id < 575617478762710049) return 1;

if ( $id >= 575617478762710049 && $id < 1151652970532347073) return 2;

if ( $id >= 1151652970532347073 && $id < 1728381697671314740) return 3;

if ( $id >= 1728381697671314740 && $id < 2305255992978150432) return 4;

if ( $id >= 2305255992978150432 && $id < 2879934343862663299) return 5;

if ( $id >= 2879934343862663299 && $id < 3456586097308066472) return 6;

if ( $id >= 3456586097308066472 && $id < 4034127590667991228) return 7;

if ( $id >= 4034127590667991228 && $id < 4610886495740908215) return 8;

if ( $id >= 4610886495740908215 && $id < 5188089445597040650) return 9;

if ( $id >= 5188089445597040650 && $id < 5764363206983886628) return 10;

if ( $id >= 5764363206983886628 && $id < 6340149158128856460) return 11;

if ( $id >= 6340149158128856460 && $id < 6917639753751591942) return 12;

if ( $id >= 6917639753751591942 && $id < 7494903696463075964) return 13;

if ( $id >= 7494903696463075964 && $id < 8070423312614934443) return 14;

if ( $id >= 8070423312614934443 && $id < 8645754150017603414) return 15;

if ( $id >= 8645754150017603414 && $id < 9222136639934948903) return 16;

if ( $id >= 9222136639934948903 && $id < 9797731625031589702) return 17;

if ( $id >= 9797731625031589702 && $id < 10374872366398685937) return 18;

if ( $id >= 10374872366398685937 && $id < 10951636964146180677) return 19;

if ( $id >= 10951636964146180677 && $id < 11527508592358295485) return 20;

if ( $id >= 11527508592358295485 && $id < 12104380263636744674) return 21;

if ( $id >= 12104380263636744674 && $id < 12682234005971217988) return 22;

if ( $id >= 12682234005971217988 && $id < 13259221689642660934) return 23;

if ( $id >= 13259221689642660934 && $id < 13836008077021721634) return 24;

if ( $id >= 13836008077021721634 && $id < 14411538489601958181) return 25;

if ( $id >= 14411538489601958181 && $id < 14989084168978764931) return 26;

if ( $id >= 14989084168978764931 && $id < 15565313755211130708) return 27;

if ( $id >= 15565313755211130708 && $id < 16142081929461448429) return 28;

if ( $id >= 16142081929461448429 && $id < 16717869929339795988) return 29;

if ( $id >= 16717869929339795988 && $id < 17293687793251719004) return 30;

if ( $id >= 17293687793251719004 && $id < 17869194657238150155) return 31;

if ( $id >= 17869194657238150155 && $id < 18446689638439209978) return 32;

return 32;

Looking at these number, you can see that at times they are separated by 1 trillion or so. This doesn't mean there is 1 trillion sites, it just means that 1 trillion sites could clump up in a bucket. I am not concern about that. I can take say 100 billion of a bucket/database, move the data to another database say bucket/database 33 without a problem. I of course would need to let the users know that their data is unavailable during this move for the sites that fall with-in the 100 billion, but moving data is fast per site-so they will not be too inconvenienced.

Also you might wonder since a hash is being used, what if two distinct hostnames hash to the same id? Its pretty unlikely but if that is the case, the Primary KEY on the table is siteIdHash, Hostname.

In conclusion this is working great. With each ETL running on each webserver 2 Servers Handle the stats for 10s of millions of websites with the data always up to date and the redundant server has 0 seconds of replication lag at all times.

No comments:

Post a Comment